Digital modernization projects delivered

Reduction in regression testing times

Reduction in Quality Engineering costs

Zero

Complex Functional Requirements:

The app needed to support symptom tracking, medication adherence, trigger management, and integration with wearable devices, making functional testing critical.

User Experience Optimization:

Many patients with chronic conditions are not highly tech-savvy, so the app needed to be intuitive and easy to navigate for users of all ages.

Performance and Compatibility:

The app had to perform seamlessly across different devices (smartphones, tablets) and operating systems (Android, iOS), even under varying conditions such as low battery or offline use.

Data Security and Compliance:

Given the sensitive nature of healthcare data, the app needed to comply with HIPAA and other relevant regulations to ensure patient privacy and data security.

Real-World Usability:

The app had to be tested with real patients to ensure it met their needs and provided tangible benefits in managing their conditions.

Accelerate time to market

Improve cost efficiencies

Improve ROI for investments made in innovation

Query Understanding

This facet of AI quality engineering assesses the chatbot’s ability to accurately interpret and classify user queries, which forms the foundation for delivering accurate and personalized responses.

Information Retrieval

Because the AI chatbot relied on RAG, the effectiveness of its responses depended on its ability to retrieve contextually relevant information from its knowledge base.

Response Generation

The final response generated through the interaction of RAG and LLM models required thorough evaluation to assess its accuracy, quality, and relevance to user queries.

Conversation Flow

Along with its responses, the AI chatbot was expected to maintain a natural and engaging flow, aligned with the brand’s customer-centric tone and style of communication.

Error Handling

To ensure optimal performance, the AI chatbot needed to be tested on how it handles exceptional cases, including unexpected inputs, ambiguous queries, and out-of-scope requests.

Integration with Insurer’s Information Database

The AI chatbot’s success depended on its effective integration with the insurer database to pull and display correct data for generating relevant and accurate responses to user queries.

Data Asset Creation

This step focused on creating a comprehensive dataset to test the Generative AI-powered chatbot. The test dataset comprised documents with annotated data points on questions, their corresponding expected answers, and validation data created by an AI data specialist. To ensure comprehensive test coverage, we used appropriate LLMs to generate multiple variations of the initial questions.
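As a rough illustration of the dataset shape described above, the sketch below pairs each annotated question and its LLM-generated variations with the same expected answer. The `QueryCase` class, the `expand_queries` helper, and the sample question are hypothetical names and placeholder data introduced for this example; they are not the project's actual schema or content.

```python
from dataclasses import dataclass, field


@dataclass
class QueryCase:
    """One annotated data point: a question, its expected answer, and paraphrases."""
    question: str
    expected_answer: str
    # Rephrasings of the question (in the case study, produced by an LLM).
    variations: list[str] = field(default_factory=list)


def expand_queries(cases: list[QueryCase]) -> list[tuple[str, str]]:
    """Pair the original question and every variation with the expected answer."""
    pairs = []
    for case in cases:
        for query in [case.question, *case.variations]:
            pairs.append((query, case.expected_answer))
    return pairs


# Illustrative placeholder data, not taken from the project.
cases = [
    QueryCase(
        question="How do I file a claim?",
        expected_answer="<expected answer annotated by the data specialist>",
        variations=["What is the process for filing a claim?"],
    )
]
test_queries = expand_queries(cases)
```

Keeping the variations attached to the original question means every rephrasing is checked against the same annotated answer, which is one simple way to widen coverage without re-annotating.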

The human-preferred validation dataset, designed to provide the guardrails for the chatbot to refine its responses, was structured into three distinct classifications:

Context Retrieval & Response Generation

The generated test data (user queries) was leveraged by an AI engineer to interact with the chatbot. For each user query, the AI engineer performed the following activities:

Test Data Preparation

While testing the chatbot’s retrieval and response efficiency, the AI engineer compiled test data that included:

The evaluation phase of the AI chatbot testing involved assessing the generated responses using intelligent automation in conjunction with manual verification to ensure scalable, accelerated, and accurate quality engineering.

AI-driven Evaluation

The first step in this phase used an AI model evaluation platform to calculate the following metrics for each validation set:

Manual Validation

The AI platform evaluation was followed by manual validation from our data specialist who reviewed the results for each test case. This process involved verifying the accuracy of the AI-generated evaluation labels, ensuring the reliability of the evaluation process.
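One simple way to quantify this check is to compare the AI-generated labels with the reviewer's labels and flag the test cases where they differ. The sketch below assumes each test case carries a single label from each source; the `label_agreement` function and the `"pass"`/`"fail"` label values are hypothetical and not part of the evaluation platform used in the project.

```python
def label_agreement(ai_labels: list[str], manual_labels: list[str]) -> tuple[float, list[int]]:
    """Return the share of matching labels and the indices where they differ."""
    if len(ai_labels) != len(manual_labels):
        raise ValueError("both label lists must cover the same test cases")
    if not ai_labels:
        raise ValueError("at least one test case is required")
    # Indices of test cases where the reviewer disagreed with the AI evaluation.
    disagreements = [
        i for i, (ai, manual) in enumerate(zip(ai_labels, manual_labels)) if ai != manual
    ]
    matches = len(ai_labels) - len(disagreements)
    return matches / len(ai_labels), disagreements


# Illustrative labels only, not project results.
ai = ["pass", "fail", "pass", "pass"]
manual = ["pass", "fail", "fail", "pass"]
rate, to_review = label_agreement(ai, manual)
```

A low agreement rate would suggest the automated evaluation itself needs tuning before its labels can be trusted at scale.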

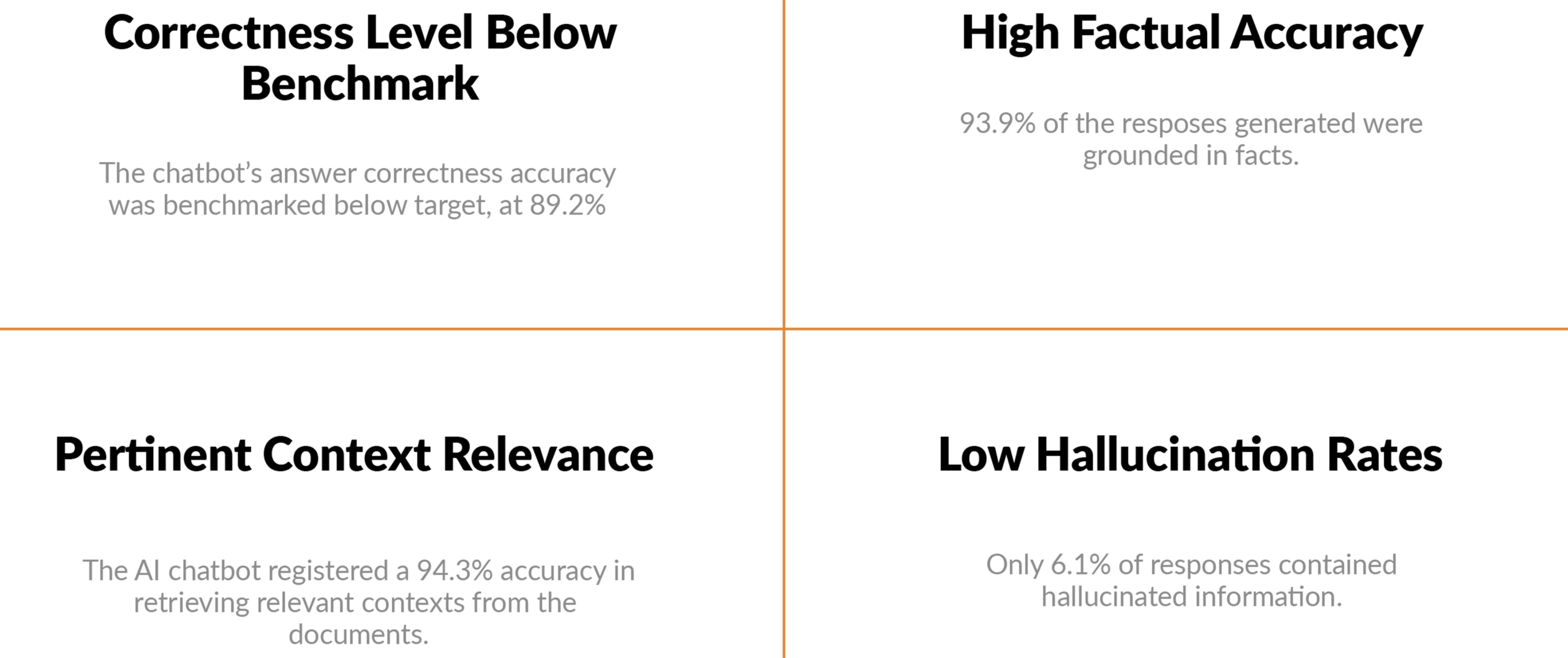

We conducted 424 tests blending positive, negative, and edge-case user queries to comprehensively evaluate the chatbot’s performance, effectiveness, and accuracy.

Combining the analysis results, we created a comprehensive test report summarizing project findings that highlighted the strengths and weaknesses of the RAG system along with actionable recommendations for improving the chatbot’s performance.

Our comprehensive report helped the insurer identify the following key details about the AI chatbot’s performance:

Based on the evaluation, we made the following recommendations to our client:

This case study showcases how QK’s comprehensive and intelligent quality engineering framework empowered a leading insurance company to failproof its AI chatbot’s performance. By providing detailed recommendations and insights on key chatbot performance parameters, we helped the client deliver enhanced customer experiences and optimize AI deployment to meet its business goals. The success of the project underscores the need for rigorous evaluation of RAG systems before deployment.

With digital penetration skyrocketing in the Middle East, the BFSI industry continues to evolve to meet the changing demands of the region’s digital-first customers. The trend has resulted in exponential growth in digital banking services, with a recent report estimating the sector to have grown at 52% between 2021 and 2023.

Our client, one of the 10 largest banks in the UAE offering a full range of innovative retail and commercial banking services, wanted to capitalize on this fast-growing sector and proactively stay ahead of the fast-changing banking landscape. To accomplish its goal, the UAE banking giant was undertaking an IT modernization journey to futureproof its digital ecosystem for high-velocity innovation, enhanced reliability, and user-centric experiences.

Combining the trifecta of proprietary processes, expertise, and technology, QualityKiosk analyzed the bank’s requirements and established a Testing Center of Excellence (TCoE) to enable accelerated quality engineering at scale.

Leveraging an AI-first approach, the TCoE helped the banking giant:

Download the complete case study today and access the roadmap to enable AI-powered enterprise-wide testing.
